Greedy to the Nth Degree

The greedy algorithm can naturally be extended to look more moves ahead when deciding which block color to remove next. We saw in the last post that to look beyond the current move, we had to modify the board-checking algorithm to mark matching blocks with the number of the move that was being searched. That way, each color could be tried in the search and then easily rolled back before trying the next color. Each color is tried on the first move, and for each of those first-move colors, each color is tried again on the second move. If on each move we ignore the color that was used for the previous move, the first move will consist of 4 searches, and each of those searches will generate 4 additional searches for the second move, resulting in 16 searches of the board for the second move.

Because of how the blocks are marked with the move number, there's no need to stop at the second move. We can continue to look further ahead in moves, looking at another 4 colors on the next move for each color chosen on the current move. Looking ahead 3 moves would result in 64 third-move searches in addition to the first- and second-move searches for a total of 84 searches. Looking ahead 4 moves would add another 256 searches, for a total of 340 searches. You can see how quickly the number of searches increases with each additional move we look ahead. The greedy look-ahead algorithm experiences exponential growth in the number of searches, and that's going to limit the number of moves that we can reasonably look ahead.
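These counts form a geometric series. The short sketch below reproduces them. It assumes a constant 4 candidate colors per move (the previous move's color skipped, as above), so looking ahead d moves costs 4 + 4^2 + ... + 4^d board searches. The function names are illustrative only.

```javascript
// Board searches needed to look `depth` moves ahead when every move
// branches into `branching` candidate colors: b + b^2 + ... + b^depth.
function countSearches(depth, branching) {
  var total = 0;
  var searchesThisMove = 1;
  for (var move = 1; move <= depth; move++) {
    searchesThisMove *= branching; // branching^move searches on this move
    total += searchesThisMove;
  }
  return total;
}

// Closed form of the same geometric series: b(b^d - 1) / (b - 1).
function countSearchesClosedForm(depth, branching) {
  return branching * (Math.pow(branching, depth) - 1) / (branching - 1);
}

[1, 2, 3, 4, 5].forEach(function (depth) {
  console.log(depth, countSearches(depth, 4), countSearchesClosedForm(depth, 4));
});
// 1 4 4
// 2 20 20
// 3 84 84
// 4 340 340
// 5 1364 1364
```

The newest term always dominates the sum, so each extra move of look-ahead roughly quadruples the total work.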

We'll tuck that thought in the back of our mind for the moment because we need to modify the algorithm a bit to enable looking ahead more than 2 moves. First, we can add to the GUI so that we can specify how many moves we want to look ahead when running the algorithm. Adding a text input after the drop-down selection box will be sufficient, and I gave this text input the id solver_max_moves so the JavaScript code can find it. The rest of the changes are all about using this new variable to control the depth of the search in the greedy look-ahead algorithm. To start, we need to add the variable and initialize it when either the solver control button or the solver batch mode run button is clicked:

```javascript
function colorWalk() {
  // ...

  function Solver() {
    var that = this;
    var iterations = 0;
    var max_moves = 2;

    this.index = 0;

    this.init = function() {
      this.solver = $('<div>', {
        id: 'solver',
        class: 'control btn',
        style: 'background-color:' + colors[this.index]
      }).on('click', function (e) {
        // .val() returns a string, so convert the search depth to a number
        max_moves = parseInt($('#solver_max_moves').val(), 10);
        that.runAlgorithm();
      }).appendTo('#solver_container');

      // ...

      $('#solver_play').on('click', function (e) {
        iterations = $('#solver_iterations').val();
        max_moves = parseInt($('#solver_max_moves').val(), 10);
        that.run();
      });
    };

    // ...
  }

  // ...
}
```

Next, greedyLookAhead() needs to use max_moves. The previous version searched exactly two moves deep, with a nested search over every color for the second move:

```javascript
this.greedyLookAhead = function() {
  var max_control = _.max(controls, function(control1) {
    if (control1.checkGameBoard(1) === 0) {
      return 0;
    }

    var matches = _.map(controls, function(control2) {
      return control2.checkGameBoard(2);
    });

    return _.max(matches);
  });

  this.index = max_control.index;
};
```

To search to any depth, we can replace that nested second-move search with a recursive function, greedyLookAheadN(), that calls itself with the next move number until it reaches max_moves:

```javascript
this.greedyLookAhead = function() {
  var max_control = _.max(controls, function(control) {
    if (control.checkGameBoard(1) === 0) {
      return 0;
    }

    return greedyLookAheadN(2);
  });

  this.index = max_control.index;
};

// Returns the largest marked-block count found by searching from `move`
// down to max_moves.
function greedyLookAheadN(move) {
  return _.max(_.map(controls, function(control) {
    var matches = control.checkGameBoard(move);

    // stop on an empty result or at the maximum search depth
    if (matches === 0 || move >= max_moves) {
      return matches;
    }

    return greedyLookAheadN(move + 1);
  }));
}
```

We can verify that the recursive function will terminate because each call to greedyLookAheadN() increments the move number, so it will eventually reach max_moves. If the board is cleared before it reaches max_moves, then there will be zero matches and the function returns without calling itself again, so it terminates in that case as well. We can check the correctness of this algorithm by comparing the statistical results of a batch run with max_moves = 2 to those of the previous version of greedyLookAhead(), and the results are indeed the same. Now we can explore what happens when we increase the search depth to 3:

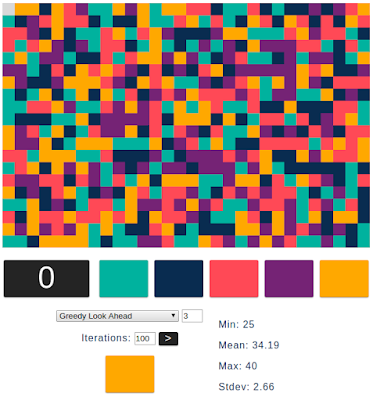

Once again the algorithm performance has improved by every statistical measure, as can be easily seen in the table of results:

| Algorithm | Min | Mean | Max | Stdev |
|---|---|---|---|---|
| Round Robin | 37 | 48.3 | 62 | 4.5 |
| RR with Skipping | 37 | 46.9 | 59 | 4.1 |
| Random Choice | 60 | 80.2 | 115 | 10.5 |
| Random with Skipping | 43 | 53.1 | 64 | 4.5 |
| Greedy | 31 | 39.8 | 48 | 3.5 |
| Greedy Look-Ahead-2 | 28 | 37.0 | 45 | 3.1 |
| Greedy Look-Ahead-3 | 25 | 34.2 | 40 | 2.7 |

However, we're definitely starting to pay a price. The algorithm is running noticeably slower because of all the searching it needs to do to look an extra move ahead. Setting max_moves to 4 makes things even worse, resulting in a run time that's getting close to intolerable. The results for such a run of 100 iterations do still improve the performance, but only slightly.

We may have hit the point of real diminishing returns, but it's hard to tell without looking at least one more move ahead. Extending to a search depth of 5 is just not feasible, unless we're willing to wait an hour for the results. If we want to break that 5-move barrier, we have a couple of options. One is to come up with a different algorithm that doesn't have exponential growth, or at least has smaller exponential growth. We'll definitely get to that, but the move-search algorithm isn't the only algorithm in this program that could be optimized. We also have a matching color search algorithm that could be improved.

Improved Color Matching

We have finally reached the pain point of the slow color search routine in Control.checkGameBoard(), and it's time to look at how we can optimize it. First, let's review how the current search algorithm works for finding blocks of the selected color that are adjacent to the empty area of the board:

1. All blocks on the board are scanned, even live ones.
2. For each block, if it is dead, then its neighbors are inspected.
3. If a neighbor matches the color being searched and isn't dead, then it is marked dead (or, during a look-ahead search, marked with the move number).
4. For any neighbor marked in step 3, the search is repeated from step 2 with that neighbor's neighbors.
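As a point of reference, here is a minimal standalone sketch of those four steps on a tiny 3x3 board. It is not the game's code. The board is a plain array of color strings in row-major order, dead is a parallel array of flags, and neighborsOf() and markColor() are hypothetical names. The inspections counter plays the same role as the _block_inspect_counter instrumentation.

```javascript
// Indices of the up-to-four orthogonal neighbors of block i (row-major grid).
function neighborsOf(i, width, size) {
  var result = [];
  if (i % width > 0) result.push(i - 1);          // not in the first column
  if (i % width < width - 1) result.push(i + 1);  // not in the last column
  if (i - width >= 0) result.push(i - width);     // not in the first row
  if (i + width < size) result.push(i + width);   // not in the last row
  return result;
}

// Block-by-block search: mark every live block of `color` that touches the
// dead area. Returns the number of neighbor inspections performed.
function markColor(grid, dead, width, color) {
  var inspections = 0;

  function checkNeighbors(positions) {
    positions.forEach(function (p) {
      inspections += 1;
      if (!dead[p] && grid[p] === color) {
        dead[p] = true;                                     // step 3
        checkNeighbors(neighborsOf(p, width, grid.length)); // step 4
      }
    });
  }

  for (var i = 0; i < grid.length; i++) { // step 1: scan every block
    if (dead[i]) {                         // step 2: expand from dead blocks
      checkNeighbors(neighborsOf(i, width, grid.length));
    }
  }
  return inspections;
}

var grid = ['r', 'g', 'g',
            'b', 'g', 'r',
            'b', 'b', 'g'];
var dead = [true, false, false, false, false, false, false, false, false];
var count = markColor(grid, dead, 3, 'g');
console.log(count); // 20
```

On this toy board the search needs 20 inspections to mark just 3 new blocks. Part of the cost comes from the scan itself: blocks that were marked dead earlier in the scan get their already-dead neighbors inspected all over again when the scan reaches them.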

In order to get a better idea of how many block inspections we're talking about, we can instrument the code with a simple counter that keeps a tally of how many block inspections occur on each batch run:

```javascript
function checkNeighbors(positions, color, check_move) {
  _.each(positions, function (position) {
    // tally every block inspection for the batch run
    _block_inspect_counter += 1;

    // ...
  });
}
```

With this counter in place, it becomes clear that we are looking at a lot of blocks on every move, far more than we need to. More specifically, every time we search through the blocks on a new move, we repeatedly find the same blocks of the same color adjacent to the blocks being marked. This repetition didn't really exist with the other algorithms, but with greedy look-ahead, we're looking at the same collections of blocks again and again with the same color choices, just in different orders each time. This duplication, along with inspecting mostly blocks that we don't need to, adds up to a ton of wasted effort.

To make searching more efficient, we can modify the data structure for the board and then use the new data structure to dramatically reduce the number of blocks the search algorithm needs to inspect. To enable this huge gain in efficiency, we're going to introduce the notion of a cluster of blocks. A cluster is a set of same-colored blocks that are connected through adjacent blocks of that color (a lone block forms a cluster of one). Clusters can be found in much the same way that the old code found adjacent blocks of the same color when marking them. Each cluster will have a list of the blocks that belong to it, and each of those blocks will have a property identifying which cluster it's in.

Once we have clusters, we need a good way to find the clusters that are adjacent to any given cluster. These adjacent clusters are called neighbors, and each cluster will have an array of pointers to its neighbors. If cluster B is a neighbor of cluster A, then A appears exactly once in B's list of neighbors, so every link in the cluster graph is bi-directional. This property is necessary because blocks can be adjacent to the cleared blocks from any direction, so we need to be able to reach clusters from any direction when searching the cluster graph.
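As a rough illustration of this structure, here is a standalone sketch. It is not the author's recursive Cluster constructor. Instead, it labels clusters with an iterative flood fill and then links touching clusters in a second pass. Neighbor ids are stored in a Set, so each link is recorded exactly once in each direction. The grid format and the names neighborsOf() and buildClusters() are assumptions of the sketch.

```javascript
// Indices of the up-to-four orthogonal neighbors of block i (row-major grid).
function neighborsOf(i, width, size) {
  var result = [];
  if (i % width > 0) result.push(i - 1);
  if (i % width < width - 1) result.push(i + 1);
  if (i - width >= 0) result.push(i - width);
  if (i + width < size) result.push(i + width);
  return result;
}

// Group adjacent same-colored blocks into clusters, then link touching
// clusters in both directions.
function buildClusters(grid, width) {
  var size = grid.length;
  var clusterOf = new Array(size).fill(-1); // block index -> cluster id
  var clusters = [];

  for (var start = 0; start < size; start++) {
    if (clusterOf[start] !== -1) continue; // already in a cluster
    var cluster = { id: clusters.length, color: grid[start],
                    blocks: [], neighbors: new Set() };
    clusters.push(cluster);
    clusterOf[start] = cluster.id;
    var stack = [start];
    while (stack.length > 0) {             // iterative flood fill
      var p = stack.pop();
      cluster.blocks.push(p);
      neighborsOf(p, width, size).forEach(function (n) {
        if (clusterOf[n] === -1 && grid[n] === cluster.color) {
          clusterOf[n] = cluster.id;        // claim before pushing: no duplicates
          stack.push(n);
        }
      });
    }
  }

  // Each pair of touching blocks from different clusters adds the link both
  // ways; the Set keeps each neighbor listed exactly once.
  for (var i = 0; i < size; i++) {
    neighborsOf(i, width, size).forEach(function (n) {
      var a = clusterOf[i];
      var b = clusterOf[n];
      if (a !== b) {
        clusters[a].neighbors.add(b);
        clusters[b].neighbors.add(a);
      }
    });
  }
  return { clusters: clusters, clusterOf: clusterOf };
}

var grid = ['r', 'g', 'g',
            'b', 'g', 'r',
            'b', 'b', 'g'];
var built = buildClusters(grid, 3);
console.log(built.clusters.length); // 5
```

Building the whole graph once per board is what makes the later searches cheap, because a search can then step from cluster to cluster instead of from block to block.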

Building the Cluster Graph

Now that we have this idea of a new and improved data structure, we need to build it from a board of individual blocks. We can start by adding a list of clusters to the game, creating a new cluster for the first block, and pushing it onto the list:

```javascript
function makeBlocks() {
  var x = 0;
  var y = 0;
  blocks = [];
  clusters = [];
  moves = 0;

  // ...

  _.each(_.range(grid_length * grid_height), function (num) {
    // ...
  });

  // building the first cluster recursively builds all of the others
  clusters.push(new Cluster(blocks[0]));
}
```

The Cluster constructor adds its first block, finds the rest of the cluster's blocks along with the blocks bordering it, and then creates clusters for any bordering blocks that don't belong to one yet:

```javascript
function Cluster(block) {
  this.blocks = [block];
  this.neighbors = [];
  block.cluster = this;

  var that = this;

  var cluster_neighbors = findClusterNeighbors(block);
  createNeighboringClusters(cluster_neighbors);

  // The helper functions below, along with markNeighbors(), are defined
  // inside this constructor so they can use `that` and `block`.
  // ...
}
```

The findClusterNeighbors() function does the work of growing the cluster:

```javascript
function findClusterNeighbors(block) {
  var cluster_neighbors = getNeighbors(block);
  var cluster_blocks = selectClusterBlocks(cluster_neighbors);
  cluster_neighbors = _.difference(cluster_neighbors, cluster_blocks);

  while (cluster_blocks.length > 0) {
    cluster_blocks = _.flatten(_.map(cluster_blocks, function(pos) {
      // a block can be selected twice in one pass; only add it once
      if (blocks[pos].cluster === that) {
        return [];
      }
      that.blocks.push(blocks[pos]);
      blocks[pos].cluster = that;
      cluster_neighbors = _.union(cluster_neighbors,
                                  getNeighbors(blocks[pos]));
      return selectClusterBlocks(getNeighbors(blocks[pos]));
    }));
    cluster_neighbors = _.difference(cluster_neighbors, cluster_blocks);
  }

  // drop any cluster members that were re-added to the neighbor list
  var pos_blocks = _.map(that.blocks, function(b) { return b.position; });
  return _.difference(cluster_neighbors, pos_blocks);
}
```

The function starts by collecting the first block's neighbors, selecting the ones that belong in this cluster as cluster_blocks, and removing those from cluster_neighbors. From that starting point, it loops through the set of blocks in cluster_blocks. For each block that belongs in the cluster, it adds the block to the cluster, sets the block's cluster to this cluster, adds all of the block's neighbors to the list of cluster_neighbors, and then returns the set of neighboring blocks that should be added to this cluster. Again, these cluster_blocks are removed from the list of cluster_neighbors, and the loop goes around again. If you think of how this function works on a large cluster, it will start with one block in the cluster, and the cluster and its cluster_neighbors will keep expanding outward until all matching blocks are added to the cluster and the list of neighbors consists of all blocks bordering the cluster. The blocks that were added to the cluster need to be removed from cluster_neighbors at the end because some of them will have been re-added to that list during the loop iterations.

To complete the description of findClusterNeighbors(), we should look at the utility functions getNeighbors() and selectClusterBlocks():

```javascript
function getNeighbors(block) {
  var neighbors = [];
  var i = block.position;

  if (i % grid_length > 0) {                         // not in the first column
    neighbors.push(i - 1);
  }
  if (i % grid_length + 1 < grid_length) {           // not in the last column
    neighbors.push(i + 1);
  }
  if (i - grid_length >= 0) {                        // not in the first row
    neighbors.push(i - grid_length);
  }
  if (i + grid_length < grid_length * grid_height) { // not in the last row
    neighbors.push(i + grid_length);
  }

  return neighbors;
}

// Defined inside Cluster(), so `block` is the cluster's starting block.
function selectClusterBlocks(neighbors) {
  return _.filter(neighbors, function(pos) {
    return blocks[pos].cluster == null &&
           blocks[pos].color === block.color;
  });
}
```

With those utility functions out of the way, we're ready to look at recursively creating the rest of the clusters with createNeighboringClusters():

```javascript
function createNeighboringClusters(cluster_neighbors) {
  _.each(cluster_neighbors, function(pos) {
    // build a cluster for any bordering block that doesn't have one yet
    if (blocks[pos].cluster == null) {
      clusters.push(new Cluster(blocks[pos]));
    }

    // link the two clusters in both directions, exactly once
    if (!_.contains(that.neighbors, blocks[pos].cluster)) {
      blocks[pos].cluster.neighbors.push(that);
      that.neighbors.push(blocks[pos].cluster);
    }
  });
}
```

Putting Clusters to Use

Whew, okay, still with me? Now that we have this enhanced data structure, we need a way to put it to use by marking whole clusters of blocks as dead or marking them with the move number for the greedy look-ahead algorithm. We can accomplish this task with a function that's very similar to how we found and marked blocks before:

```javascript
this.markNeighbors = function(color, check_move) {
  _.each(that.neighbors, function (neighbor) {
    _block_inspect_counter += 1;

    // a cluster is marked as a whole, so its first block represents it
    var block = neighbor.blocks[0];

    if (block.color === color &&
        !block.isDead &&
        block.marked === 0) {
      _.each(neighbor.blocks, function(block) {
        if (check_move > 0) {
          block.marked = check_move;
        } else {
          block.isDead = true;
          $('#block' + block.position).css('background-color', '#d9d9d9');
        }
      });
    }
  });
};
```

Control.checkGameBoard() can then loop over the clusters instead of the blocks, calling markNeighbors() on every cluster that is dead or was marked on an earlier move:

```javascript
this.checkGameBoard = function(check_move) {
  var color = this.color;

  // roll back marks from this move and any deeper moves
  _.each(blocks, function (block) {
    if (block.marked >= check_move) {
      block.marked = 0;
    }
  });

  // New search using clusters
  _.each(clusters, function (cluster) {
    var block = cluster.blocks[0];
    if (block.isDead || (block.marked < check_move &&
                         block.marked !== 0)) {
      cluster.markNeighbors(color, check_move);
    }
  });

  return _.filter(blocks, function (block) {
    return (0 !== block.marked);
  }).length;
};
```

This version still loops over every cluster on every search. The next version of markNeighbors() adds two pieces of code. The first is a recursive call: when a neighboring cluster was marked on an earlier move of the current look-ahead, the search continues from that cluster's neighbors:

```javascript
this.markNeighbors = function(color, check_move) {
  _.each(that.neighbors, function (neighbor) {
    _block_inspect_counter += 1;

    var block = neighbor.blocks[0];

    if (that.blocks[0].marked < block.marked && block.marked < check_move) {
      // neighbor was marked earlier in this look-ahead: search from it too
      block.cluster.markNeighbors(color, check_move);
    } else if (block.color === color && !block.isDead && block.marked === 0) {
      _.each(neighbor.blocks, function(block) {
        if (check_move > 0) {
          block.marked = check_move;
        } else {
          block.isDead = true;
          $('#block' + block.position).css('background-color', '#d9d9d9');
        }
      });

      if (check_move === 0) {
        // real move: merge the newly dead cluster into the dead pool
        that.blocks = _.union(that.blocks, neighbor.blocks);
        that.neighbors = _.union(_.without(that.neighbors, neighbor),
                                 _.without(neighbor.neighbors, that));
        _.each(neighbor.neighbors, function (next_neighbor) {
          if (next_neighbor === that) {
            return;
          }
          // re-point the dead cluster's neighbors at the dead pool
          next_neighbor.neighbors = _.union(
            _.without(next_neighbor.neighbors, neighbor), [that]);
        });
      }
    }
  });
};
```

The other addition, at the end of the else-if branch, merges matching clusters into the dead pool of blocks on a real move (when check_move is 0), building a growing set of blocks that are all marked dead. This dead pool can be accessed immediately with blocks[0].cluster, and it allows for extremely efficient searching one move deep because all of the neighbors of the dead pool are the clusters we're interested in for the next move. Note that this merge is only a partial merge. The newly dead cluster's blocks are added to the dead pool's list of blocks, and the neighbor links are carefully updated so that the dead pool's list of neighbors includes the newly dead cluster's neighbors and those neighbors now point to the dead pool. However, the blocks and neighbors of the newly dead cluster itself are not updated, since they don't need to be. That cluster will never be directly referenced again, so we don't need to waste time updating it.
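The recursion through earlier look-ahead marks and the partial merge can be tried out on a small standalone model. This is a simplified sketch, not the game's code. Each hypothetical cluster object stores its own color, dead flag, mark, and block count (size) instead of an array of blocks. The merge re-points the absorbed cluster's neighbors at the dead pool, as described above. The board is tiny: five clusters, with r0 as the dead pool.

```javascript
// Hypothetical cluster model: color, dead flag, look-ahead mark
// (0 = unmarked) and block count instead of a block list.
function makeCluster(name, color, size) {
  return { name: name, color: color, size: size, dead: false, marked: 0, neighbors: [] };
}

function link(a, b) {
  a.neighbors.push(b);
  b.neighbors.push(a);
}

// Roll back marks made on this move or any deeper move.
function resetMarks(clusters, checkMove) {
  clusters.forEach(function (c) {
    if (c.marked >= checkMove) c.marked = 0;
  });
}

// Total size of all clusters marked during the current look-ahead.
function countMarked(clusters) {
  return clusters.reduce(function (sum, c) {
    return sum + (c.marked !== 0 ? c.size : 0);
  }, 0);
}

function markNeighbors(cluster, color, checkMove) {
  // Iterate over a copy because a real move rewrites cluster.neighbors.
  cluster.neighbors.slice().forEach(function (nb) {
    if (cluster.marked < nb.marked && nb.marked < checkMove) {
      // nb was claimed on an earlier look-ahead move: keep searching from it
      markNeighbors(nb, color, checkMove);
    } else if (nb.color === color && !nb.dead && nb.marked === 0) {
      if (checkMove > 0) {
        nb.marked = checkMove;       // tentative look-ahead mark
      } else {
        mergeIntoPool(cluster, nb);  // real move: `cluster` is the dead pool
      }
    }
  });
}

// Partial merge: the pool takes over nb's blocks and neighbors, and nb's
// neighbors are re-pointed at the pool. nb itself is left stale.
function mergeIntoPool(pool, nb) {
  nb.dead = true;
  pool.size += nb.size;
  pool.neighbors = pool.neighbors.filter(function (c) { return c !== nb; });
  nb.neighbors.forEach(function (next) {
    if (next === pool) return;
    next.neighbors = next.neighbors.filter(function (c) { return c !== nb; });
    if (next.neighbors.indexOf(pool) === -1) next.neighbors.push(pool);
    if (pool.neighbors.indexOf(next) === -1) pool.neighbors.push(next);
  });
}

var pool = makeCluster('r0', 'r', 1);
pool.dead = true;
var g1 = makeCluster('g1', 'g', 3);
var blue = makeCluster('b3', 'b', 3);
var r5 = makeCluster('r5', 'r', 1);
var g8 = makeCluster('g8', 'g', 1);
link(pool, g1); link(pool, blue); link(g1, r5);
link(g1, blue); link(blue, g8); link(r5, g8);
var all = [pool, g1, blue, r5, g8];

// Look ahead: green on move 1, then red on move 2.
resetMarks(all, 1);
markNeighbors(pool, 'g', 1);
resetMarks(all, 2);
markNeighbors(pool, 'r', 2);
var lookAheadCount = countMarked(all);
console.log(lookAheadCount); // 4 (g1 on move 1, r5 on move 2)

// Real move: blue. Marks are cleared and the blue cluster joins the pool.
resetMarks(all, 0);
markNeighbors(pool, 'b', 0);
console.log(pool.size, pool.neighbors.map(function (c) { return c.name; }).join(','));
// 4 g1,g8
```

The move-2 search reaches r5 only by recursing through g1, which was marked on move 1. After the real move, the pool is linked to the blue cluster's former neighbors in both directions.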

With that addition to markNeighbors(), we can dramatically simplify our search algorithm:

```javascript
this.checkGameBoard = function(check_move) {
  // roll back marks from this move and any deeper moves
  _.each(blocks, function (block) {
    if (block.marked >= check_move) {
      block.marked = 0;
    }
  });

  // search outward from the dead pool only
  blocks[0].cluster.markNeighbors(this.color, check_move);

  return _.filter(blocks, function (block) {
    return (0 !== block.marked);
  }).length;
};
```

I think we're ready to put the cluster search method to work and see how well the greedy look-ahead-by-5 algorithm performs.

Well, that's surprising. It doesn't seem to do much better at all. Comparing it with the rest of the algorithms, we get this:

| Algorithm | Min | Mean | Max | Stdev |
|---|---|---|---|---|
| Round Robin | 37 | 48.3 | 62 | 4.5 |
| RR with Skipping | 37 | 46.9 | 59 | 4.1 |
| Random Choice | 60 | 80.2 | 115 | 10.5 |
| Random with Skipping | 43 | 53.1 | 64 | 4.5 |
| Greedy | 31 | 39.8 | 48 | 3.5 |
| Greedy Look-Ahead-2 | 28 | 37.0 | 45 | 3.1 |
| Greedy Look-Ahead-3 | 25 | 34.2 | 40 | 2.7 |
| Greedy Look-Ahead-4 | 25 | 33.3 | 39 | 2.6 |
| Greedy Look-Ahead-5 | 25 | 33.1 | 41 | 2.8 |

On average, the look-ahead-by-5 run did only slightly better than look-ahead-by-4, and it even did worse in at least one case, giving it a maximum of 41 moves instead of 39. It may be that looking ahead that many moves causes the algorithm to pick move orders that look better at first but, in the long run, turn out slightly worse than choices made with less information. At any rate, it looks like we're reaching the limits of the greedy look-ahead algorithm: looking much further ahead will take an inordinate amount of time, and we probably have to look much further ahead to get additional gains from the algorithm.

In the process of expanding the greedy look-ahead algorithm we've discovered a few things. First, looking ahead up to 3 moves is quite beneficial to the greedy algorithm. We were able to regularly break the 30-move barrier, and we improved the average performance from about 40 moves to 34 moves.

Second, we ran into significant diminishing returns beyond looking 3 moves ahead while incurring exponentially rising costs in run time. Going from 3 moves ahead to 5 moves ahead only decreased the average number of moves by one, but increased the running time by a factor of six. Trying to push the algorithm further will just run into more extreme exponential growth in run time with potentially no gains in reducing the number of moves.

Finally, we were able to increase the speed of the color matching algorithm substantially by using a more intelligent data structure that takes advantage of the features of the game. Grouping blocks into clusters when each board was created allowed us to make a more efficient search algorithm, but in the end it only delayed the inevitable slowness that results from the exponential growth of looking more moves ahead. We could find other optimizations, but each order-of-magnitude improvement would only allow us to look one or two more moves ahead, and there just aren't that many order-of-magnitude optimizations to the color matching algorithm. The real limitation is in the greedy look-ahead algorithm itself, so next time we will move on to exploring other options and see how they do.

Article Index

Part 1: Introduction & Setup

Part 2: Tooling & Round-Robin

Part 3: Random & Skipping

Part 4: The Greedy Algorithm

Part 5: Greedy Look Ahead

Part 6: Heuristics & Hybrids

Part 7: Breadth-First Search

Part 8: Depth-First Search

Part 9: Dijkstra's Algorithm

Part 10: Dijkstra's Hybrids

Part 11: Priority Queues

Part 12: Summary
